A system is a combination of interacting elements organized to achieve one or more stated purposes. For a collection of elements to be a system, the system elements must also have enough cohesion, teleonomy, and collaboration, as explained in an earlier post [1].

Systems are often said to have “emergent properties” [2]. Many different definitions have been suggested for emergent properties:

- “Emergence occurs when a complex entity has properties or behaviors that its parts do not have on their own, and emerge only when they interact in a wider whole”. [2]

- “Emergent properties are unexpected properties”. [3]

- “Emergent properties are new properties that occur in a system later over time from the properties of the original system”. [4]

- “Emergent properties are the properties of a complex system that characterize its emergence. In other words, these are discovered behaviors of a system that emerge spontaneously”. [5]

- “Emergence occurs when novel entities and functions appear in a system through self-organization.” [6]

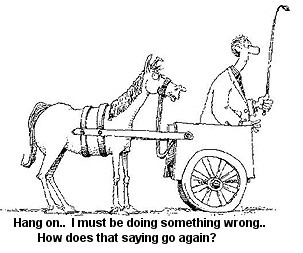

I think we often get emergence backwards. First of all, of course the parts of the system don’t have the properties that the system as a whole has. Otherwise they would be the system. But my main objection to many of the definitions, including those above, is that they suggest that the emergent properties somehow emerge or occur spontaneously and unexpectedly. This, I claim, is often not the case.

Engineered systems

A system such as an excavator is engineered to satisfy a set of system requirements. Serious engineers don’t put together a bunch of random system elements and hope for properties to emerge. Instead they select the elements and their configuration meticulously and analyze how well the proposed system satisfies the system requirements. They then iterate the design-analysis cycle until the system requirements are satisfied, i.e., the system has the expected properties.
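The design-analysis cycle can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the requirement (200 kN of cylinder force), the 300 bar working pressure, the starting bore, and the function names are all hypothetical and not taken from any real excavator. The only physics used is that the force of a hydraulic cylinder equals pressure times piston area.

```python
import math


def cylinder_force_kn(bore_mm: float, pressure_bar: float) -> float:
    """Analysis step: force of a hydraulic cylinder (pressure x piston area)."""
    area_m2 = math.pi * (bore_mm / 1000.0) ** 2 / 4.0
    return pressure_bar * 1e5 * area_m2 / 1000.0  # bar -> Pa, N -> kN


def size_cylinder(required_kn: float,
                  pressure_bar: float = 300.0,  # hypothetical working pressure
                  bore_mm: float = 80.0,        # hypothetical first design guess
                  step_mm: float = 5.0,
                  max_iterations: int = 100):
    """Iterate design and analysis until the system requirement is met."""
    for _ in range(max_iterations):
        force = cylinder_force_kn(bore_mm, pressure_bar)  # analyze the design
        if force >= required_kn:                          # requirement satisfied
            return bore_mm, force
        bore_mm += step_mm                                # revise the design
    raise ValueError("no design within the search range meets the requirement")


bore, force = size_cylinder(required_kn=200.0)
print(f"bore = {bore} mm, force = {force:.1f} kN")
```

The requirement comes first and drives the choice of element; the resulting force is the expected outcome of the loop, not a surprise.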

Artificial neural networks (ANNs) are designed by training the whole network (the system) with a sufficient amount of training data to get the desired system-level behavior, be it to answer questions or to detect cancer in X-ray images. The training data embodies the functional system requirements of the ANN. The individual neurons of the ANN (the system elements) adapt to play their part in the total ANN function. The ANN’s abilities don’t “emerge” from the elements; through backpropagation we force the elements to organize themselves to produce those abilities.
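A minimal sketch of this top-down pressure, in plain Python without any ML library: a tiny network is trained on the XOR truth table, which here plays the role of the system requirement. The network size, learning rate, number of epochs, and seed are arbitrary assumptions chosen for illustration, and the sketch makes no claim that this particular run solves XOR perfectly. It only shows the system-level error being pushed down to every weight.

```python
import math
import random

# The "system requirement": the XOR truth table as (inputs, target) pairs.
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w_hidden, w_out, x):
    # Each hidden row is [weight_x0, weight_x1, bias]; w_out ends with its bias.
    hidden = [sigmoid(w[0] * x[0] + w[1] * x[1] + w[2]) for w in w_hidden]
    out = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + w_out[-1])
    return hidden, out


def mean_squared_error(w_hidden, w_out, data):
    return sum((forward(w_hidden, w_out, x)[1] - t) ** 2 for x, t in data) / len(data)


def train_xor_network(n_hidden=3, epochs=2000, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    w_hidden = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(n_hidden)]
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    initial_loss = mean_squared_error(w_hidden, w_out, XOR_DATA)

    for _ in range(epochs):
        grad_hidden = [[0.0] * 3 for _ in range(n_hidden)]
        grad_out = [0.0] * (n_hidden + 1)
        for x, target in XOR_DATA:
            hidden, out = forward(w_hidden, w_out, x)
            # System-level error at the output (gradient of half the squared error).
            delta_out = (out - target) * out * (1.0 - out)
            for j, h in enumerate(hidden):
                grad_out[j] += delta_out * h
                # Backpropagation: the output error is passed down to neuron j.
                delta_h = delta_out * w_out[j] * h * (1.0 - h)
                grad_hidden[j][0] += delta_h * x[0]
                grad_hidden[j][1] += delta_h * x[1]
                grad_hidden[j][2] += delta_h
            grad_out[-1] += delta_out
        # Full-batch gradient descent step on every weight.
        for j in range(n_hidden):
            for k in range(3):
                w_hidden[j][k] -= learning_rate * grad_hidden[j][k]
        for j in range(n_hidden + 1):
            w_out[j] -= learning_rate * grad_out[j]

    final_loss = mean_squared_error(w_hidden, w_out, XOR_DATA)
    return w_hidden, w_out, initial_loss, final_loss


_, _, loss_before, loss_after = train_xor_network()
print(f"loss before training: {loss_before:.4f}, after: {loss_after:.4f}")
```

No neuron is told what to compute. Each one is adjusted only according to how much it contributed to the error measured at the system level.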

Evolved systems

When nature designs a system like an animal, it likewise exerts pressure on the animal species that can be interpreted as system requirements. This external pressure is propagated top-down to the system elements, the organs and other parts of the animal. The long necks of giraffes have not emerged; they have evolved because of a system-level requirement to reach leaves that other animals can’t reach.
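As a toy illustration of selection pressure acting as a system requirement, the following Python sketch lets a population of neck lengths evolve under a crude rule: in each generation only the taller half survives and leaves offspring with small random variation. All numbers (starting length, variation, population size, seed) are made-up assumptions, and truncation selection is a deliberate simplification, not a model of real giraffe evolution.

```python
import random
import statistics


def evolve_neck_length(generations=50, population_size=200,
                       mutation_sd=0.05, seed=1):
    """Return the mean neck length (in metres) before and after selection."""
    rng = random.Random(seed)
    # Hypothetical starting population: necks around 2.0 m with some variation.
    population = [rng.gauss(2.0, 0.2) for _ in range(population_size)]
    initial_mean = statistics.mean(population)

    for _ in range(generations):
        # System-level requirement: reach high leaves. Only the taller half survives.
        survivors = sorted(population)[population_size // 2:]
        # Each survivor leaves two offspring with slightly varied neck length.
        population = [parent + rng.gauss(0.0, mutation_sd)
                      for parent in survivors
                      for _ in range(2)]

    return initial_mean, statistics.mean(population)


before, after = evolve_neck_length()
print(f"mean neck length before: {before:.2f} m, after: {after:.2f} m")
```

The individual variations are random, but the direction of change is set by the requirement imposed on the whole animal.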

An ant colony most likely has enough cohesion, collaboration, and teleonomy [1] for the system-level (ant-colony-level) requirements to influence the evolution of individual ants. The ant colony with its anthill therefore doesn’t emerge from the behavior of the individual ants but is a product of top-down system requirements imposed on the colony and propagated to the individual ants.

A large health care system, on the other hand, does not have enough cohesion or teleonomy for top-down design to be effective [1]. Instead it needs to be designed using a combination of top-down and “inside-out” design:

- Create simple rules for the interactions in the system and enforce these rules.

- Design mechanisms and space for innovation and validation of innovation.

- Design ways to distribute validated innovations within the organization.

We can thus expect some emergent behavior in large health care systems. More on how to design these kinds of systems can be found in [11].

Consciousness

The brain is said to be the most complex system in the known universe. Consciousness in the form of subjective mental states (states including subjective experiences) is often claimed to be an emergent property of the information processing in the brain (the system). Another theory is that consciousness is an epiphenomenon without any causal power. Other theories are panpsychism [8] and philosophical idealism [9] but I consider these even more far-fetched than emergentism and epiphenomenalism.

I (and others) hypothesize that the capability to hold subjective mental states has evolved as an answer to evolutionary pressure, just like the giraffe’s long neck [7].

There is a fair amount of debate about the utility and nature of subjective mental states. Artificial systems such as autonomous vehicles seem to be working reasonably well without any subjective experiences. My general position is that subjective mental states are high-level internal (to the brain) representations of important real-world states, often formed through observations. The subjective attributes make the mental state more useful. High-level representations also reliably emerge in ANNs during certain types of training (presumably without the subjective experience dimension though) [10].

It seems very unlikely that the full capability to have subjective mental experiences would emerge spontaneously from some random neural activity. It is more likely that neural activity patterns that happened to generate proto-conscious states were selected for, giving rise to more and more differentiated and useful subjective mental states.

It is more likely that subjective mental states increase the fitness of the organism, i.e., fulfill some “system requirements”. Nature seldom develops unnecessary resource-hungry capabilities. Intuitively it is rather easy to understand that pleasure, pain, taste, smell, color, and other qualia can be useful. I will save further speculations about the nature and utility of subjective mental states for future posts.

Summary

System-level requirements specifying desired or adaptive system properties are the antecedent and the cause of the selection and configuration of the system elements in both artificial and natural systems (as long as the systems exhibit enough cohesion, teleonomy, and collaboration).

Most systems thus have the properties they have because they were designed that way, either by humans or by nature, not because the properties “emerged”.

When we say that a system has emergent properties we most of the time implicitly mean that we don’t understand the system well enough or haven’t been able to design it to meet well-defined system requirements. The emergent properties constitute the residual, the remaining uncertainty, when the system has been designed as well as possible.

Links

[1] What is a system. Ostrogothia blog post.

[2] Wikipedia on Emergence.

[3] Christopher W. Johnson. What are Emergent Properties and How Do They Affect the Engineering of Complex Systems?

[4] John Van Dinther. Conversation at Quora.

[5] M Y Nikolaev and C Fortin. Systems thinking ontology of emergent properties for complex engineering systems. 2020 J. Phys.: Conf. Ser. 1687 012005

[6] Feinberg Todd E., Mallatt Jon. Phenomenal Consciousness and Emergence: Eliminating the Explanatory Gap. Frontiers in Psychology. Vol.11. 2020.

[7] Jean-Louis Dessalles. Qualia and spandrels: an engineering perspective. Technical Report ENST 2001-D-012, pp.350, 2001. hal-00614805.

[8] Panpsychism. Internet Encyclopedia of Philosophy.

[9] Visan, Cosmin. The Archeology of Qualia. Journal Of Anthropological And Archeological Sciences 4 (5):565-569.

[10] Johnston, W.J., Fusi, S. Abstract representations emerge naturally in neural networks trained to perform multiple tasks. Nat Commun 14, 1040 (2023). https://doi.org/10.1038/s41467-023-36583-0

[11] Gary Kaplan et al. Bringing a Systems Approach to Health.